10分钟学会Pandas

Pandas最初被作为金融数据分析工具而开发出来,在金融领域被广泛使用。Pandas纳入了大量库和一些标准的数据模型,提供了高效地操作大型数据集所需的工具、函数和方法。

本文是针对pandas新手的快速入门学习指南。你可以在 AI量化平台-编写策略 里,一步一步的学习和实践。

# 导入库

import pandas as pd

import numpy as np

Pandas数据结构

主要数据结构:Series和DataFrame,Series是一种类似于一维数组的对象,它由一组数据以及一组与之相关的数据标签(即索引)组成。DataFrame是一个表格型 的数据结构,可以简单地理解为是由多个具有相同的索引的Series组成。DataFrame是金融数据分析中特别常用的数据结构,也是我们平台最常遇到的数据结构。

# 创建Series,以日期为索引

dates = pd.date_range('2/6/2017', periods=5, freq='D')

s = pd.Series([1,3,5,np.nan,6,],index=dates)

s

2017-02-06 1.0

2017-02-07 3.0

2017-02-08 5.0

2017-02-09 NaN

2017-02-10 6.0

Freq: D, dtype: float64

# 创建DataFrame,以日期为索引

df = pd.DataFrame(np.random.randn(5,4), index=dates, columns=list('ABCD'))

df

| A | B | C | D |

|---|

| 2017-02-06 | -1.738581 | -1.750260 | -0.532282 | -0.409738 |

|---|

| 2017-02-07 | -0.871625 | 0.188268 | -0.689427 | 1.128257 |

|---|

| 2017-02-08 | 1.504632 | -0.229489 | -2.775763 | 1.867307 |

|---|

| 2017-02-09 | -1.753426 | 1.252952 | 0.053105 | 0.870436 |

|---|

| 2017-02-10 | -1.162485 | -0.376172 | 1.548791 | -0.928139 |

|---|

# 获取平台数据

df = D.history_data(['000005.SZA'],start_date='2017-01-06',end_date='2017-01-16',

fields=['open','high','low','close'], groupped_by_instrument=False)

# 将日期列作为索引

df = df.set_index('date')

df

| date | instrument | open | high | low | close |

|---|

| 2017-01-06 | 000005.SZA | 64.502495 | 66.077988 | 64.224464 | 65.151230 |

|---|

| 2017-01-09 | 000005.SZA | 64.502495 | 65.892639 | 64.409821 | 65.521935 |

|---|

| 2017-01-10 | 000005.SZA | 65.521935 | 65.614609 | 64.965874 | 64.965874 |

|---|

| 2017-01-11 | 000005.SZA | 64.965874 | 64.965874 | 63.575737 | 63.575737 |

|---|

| 2017-01-12 | 000005.SZA | 63.205032 | 63.761089 | 62.741653 | 62.927006 |

|---|

| 2017-01-13 | 000005.SZA | 62.927006 | 63.205032 | 61.907570 | 61.907570 |

|---|

| 2017-01-16 | 000005.SZA | 62.185596 | 62.278271 | 56.810387 | 59.683346 |

|---|

查看数据

# 显示DataFrame前几行(默认是5)的数据

df.head()

| date | instrument | open | high | low | close |

|---|

| 2017-01-06 | 000005.SZA | 64.502495 | 66.077988 | 64.224464 | 65.151230 |

|---|

| 2017-01-09 | 000005.SZA | 64.502495 | 65.892639 | 64.409821 | 65.521935 |

|---|

| 2017-01-10 | 000005.SZA | 65.521935 | 65.614609 | 64.965874 | 64.965874 |

|---|

| 2017-01-11 | 000005.SZA | 64.965874 | 64.965874 | 63.575737 | 63.575737 |

|---|

| 2017-01-12 | 000005.SZA | 63.205032 | 63.761089 | 62.741653 | 62.927006 |

|---|

# 显示DataFrame后行的数据

df.tail(2)

| date | instrument | open | high | low | close |

|---|

| 2017-01-13 | 000005.SZA | 62.927006 | 63.205032 | 61.907570 | 61.907570 |

|---|

| 2017-01-16 | 000005.SZA | 62.185596 | 62.278271 | 56.810387 | 59.683346 |

|---|

显示索引、列名以及底层的numpy数据

# 数据索引

df.index

DatetimeIndex(['2017-01-06', '2017-01-09', '2017-01-10', '2017-01-11',

'2017-01-12', '2017-01-13', '2017-01-16'],

dtype='datetime64[ns]', name='date', freq=None)

# 数据列名

df.columns

Index(['instrument', 'open', 'high', 'low', 'close'], dtype='object')

# 底层numpy数据

df.values

array([['000005.SZA', 64.50249481201172, 66.07798767089844,

64.2244644165039, 65.15122985839844],

['000005.SZA', 64.50249481201172, 65.89263916015625,

64.40982055664062, 65.52193450927734],

['000005.SZA', 65.52193450927734, 65.61460876464844,

64.96587371826172, 64.96587371826172],

['000005.SZA', 64.96587371826172, 64.96587371826172,

63.57573699951172, 63.57573699951172],

['000005.SZA', 63.20503234863281, 63.76108932495117,

62.74165344238281, 62.927005767822266],

['000005.SZA', 62.927005767822266, 63.20503234863281,

61.907569885253906, 61.907569885253906],

['000005.SZA', 62.18559646606445, 62.27827072143555,

56.810386657714844, 59.683345794677734]], dtype=object)

# 数据类型

df.dtypes

instrument object

open float32

high float32

low float32

close float32

dtype: object

转置、排序、偏移

# 转置

df.T

| date | 2017-01-06 00: 00: 00 | 2017-01-09 00: 00: 00 | 2017-01-10 00: 00: 00 | 2017-01-11 00: 00: 00 | 2017-01-12 00: 00: 00 | 2017-01-13 00: 00: 00 | 2017-01-16 00: 00: 00 |

|---|

| instrument | 000005.SZA | 000005.SZA | 000005.SZA | 000005.SZA | 000005.SZA | 000005.SZA | 000005.SZA |

|---|

| open | 64.5025 | 64.5025 | 65.5219 | 64.9659 | 63.205 | 62.927 | 62.1856 |

|---|

| high | 66.078 | 65.8926 | 65.6146 | 64.9659 | 63.7611 | 63.205 | 62.2783 |

|---|

| low | 64.2245 | 64.4098 | 64.9659 | 63.5757 | 62.7417 | 61.9076 | 56.8104 |

|---|

| close | 65.1512 | 65.5219 | 64.9659 | 63.5757 | 62.927 | 61.9076 | 59.6833 |

|---|

# 按轴进行排序

df.sort_index(axis=1, ascending=False)

| date | open | low | instrument | high | close |

|---|

| 2017-01-06 | 64.502495 | 64.224464 | 000005.SZA | 66.077988 | 65.151230 |

|---|

| 2017-01-09 | 64.502495 | 64.409821 | 000005.SZA | 65.892639 | 65.521935 |

|---|

| 2017-01-10 | 65.521935 | 64.965874 | 000005.SZA | 65.614609 | 64.965874 |

|---|

| 2017-01-11 | 64.965874 | 63.575737 | 000005.SZA | 64.965874 | 63.575737 |

|---|

| 2017-01-12 | 63.205032 | 62.741653 | 000005.SZA | 63.761089 | 62.927006 |

|---|

| 2017-01-13 | 62.927006 | 61.907570 | 000005.SZA | 63.205032 | 61.907570 |

|---|

| 2017-01-16 | 62.185596 | 56.810387 | 000005.SZA | 62.278271 | 59.683346 |

|---|

# 按值进行排序

df.sort_values(by='close',ascending=False) # ascending默认升序,ascending=False降序

| date | instrument | open | high | low | close |

|---|

| 2017-01-09 | 000005.SZA | 64.502495 | 65.892639 | 64.409821 | 65.521935 |

|---|

| 2017-01-06 | 000005.SZA | 64.502495 | 66.077988 | 64.224464 | 65.151230 |

|---|

| 2017-01-10 | 000005.SZA | 65.521935 | 65.614609 | 64.965874 | 64.965874 |

|---|

| 2017-01-11 | 000005.SZA | 64.965874 | 64.965874 | 63.575737 | 63.575737 |

|---|

| 2017-01-12 | 000005.SZA | 63.205032 | 63.761089 | 62.741653 | 62.927006 |

|---|

| 2017-01-13 | 000005.SZA | 62.927006 | 63.205032 | 61.907570 | 61.907570 |

|---|

| 2017-01-16 | 000005.SZA | 62.185596 | 62.278271 | 56.810387 | 59.683346 |

|---|

shift数据偏移(可理解为回溯)

# 利用pct_change计算每日收益

df = D.history_data(['000001.SZA'],'2010-01-01','2016-12-31',fields=['close'],groupped_by_instrument=False).set_index('date')['close']

rets = df.pct_change()

rets.head()

date

2010-01-04 NaN

2010-01-05 -0.017292

2010-01-06 -0.017167

2010-01-07 -0.010917

2010-01-08 -0.002208

Name: close, dtype: float32

# 计算每日收益,等价于pct_change函数

daily_returns = df / df.shift(1) -1 # 正数表示向前偏移,负数表示向后偏移

daily_returns.head()

date

2010-01-04 NaN

2010-01-05 -0.017292

2010-01-06 -0.017167

2010-01-07 -0.010917

2010-01-08 -0.002208

Name: close, dtype: float32

数据选择

选取

df = D.history_data(['000005.SZA'],start_date='2017-01-06',end_date='2017-02-10',

fields=['open','high','low','close'], groupped_by_instrument=False)

df = df.set_index('date')

# 选择某一列数据,它会返回一个Series,等同于df.close:

df['close']

date

2017-01-06 65.151230

2017-01-09 65.521935

2017-01-10 64.965874

2017-01-11 63.575737

2017-01-12 62.927006

2017-01-13 61.907570

2017-01-16 59.683346

2017-01-17 60.054047

2017-01-18 60.702782

2017-01-19 59.683346

2017-01-20 60.239399

2017-01-23 60.517429

2017-01-24 60.332077

2017-01-25 60.424751

2017-01-26 60.795456

2017-02-03 60.517429

2017-02-06 60.795456

2017-02-07 61.166161

2017-02-08 61.073483

2017-02-09 61.444187

2017-02-10 61.536865

Name: close, dtype: float32

# 获取2017-02-06到2017-02-09的所有数据

df['20170206':'20170209']

| date | instrument | open | high | low | close |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 |

|---|

| 2017-02-07 | 000005.SZA | 60.795456 | 61.629539 | 60.424751 | 61.166161 |

|---|

| 2017-02-08 | 000005.SZA | 60.980808 | 61.166161 | 60.424751 | 61.073483 |

|---|

| 2017-02-09 | 000005.SZA | 61.073483 | 61.536865 | 60.888130 | 61.444187 |

|---|

# 获取2017-02-06的所有数据

df.ix['2017-02-06':'2017-02-06',

| date | instrument | open | high | low | close |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 |

|---|

# 获取2017-02-06的开盘价和收盘价数据

df.ix['2017-02-06':'2017-02-06',['open','close']]

| date | open | close |

|---|

| 2017-02-06 | 60.517429 | 60.795456 |

|---|

# 获取2017-02-06和2017-02-09间断两天的开盘价和收盘价数据

df.ix['2017-02-06':'2017-02-09',['open','close']]

| date | open | close |

|---|

| 2017-02-06 | 60.517429 | 60.795456 |

|---|

| 2017-02-07 | 60.795456 | 61.166161 |

|---|

| 2017-02-08 | 60.980808 | 61.073483 |

|---|

| 2017-02-09 | 61.073483 | 61.444187 |

|---|

# 获取2017-02-06的收盘价,返回DataFrame

df.ix['2017-02-06':'2017-02-06',['open']]

| date | open |

|---|

| 2017-02-06 | 60.517429 |

|---|

# 获取2017-02-06的收盘价,返回标量

df.ix['2017-02-06','open']

60.517429

布尔索引

# 获取最高价大于64的交易日数据

df[df.high > 64]

| date | instrument | open | high | low | close |

|---|

| 2017-01-06 | 000005.SZA | 64.502495 | 66.077988 | 64.224464 | 65.151230 |

|---|

| 2017-01-09 | 000005.SZA | 64.502495 | 65.892639 | 64.409821 | 65.521935 |

|---|

| 2017-01-10 | 000005.SZA | 65.521935 | 65.614609 | 64.965874 | 64.965874 |

|---|

| 2017-01-11 | 000005.SZA | 64.965874 | 64.965874 | 63.575737 | 63.575737 |

|---|

| 2017-01-18 | 000005.SZA | 60.888130 | 64.687851 | 60.517429 | 60.702782 |

|---|

where 索引

# 选出大于60的元素

df[df > 60]

| date | instrument | open | high | low | close |

|---|

| 2017-01-06 | 000005.SZA | 64.502495 | 66.077988 | 64.224464 | 65.151230 |

|---|

| 2017-01-09 | 000005.SZA | 64.502495 | 65.892639 | 64.409821 | 65.521935 |

|---|

| 2017-01-10 | 000005.SZA | 65.521935 | 65.614609 | 64.965874 | 64.965874 |

|---|

| 2017-01-11 | 000005.SZA | 64.965874 | 64.965874 | 63.575737 | 63.575737 |

|---|

| 2017-01-12 | 000005.SZA | 63.205032 | 63.761089 | 62.741653 | 62.927006 |

|---|

| 2017-01-13 | 000005.SZA | 62.927006 | 63.205032 | 61.907570 | 61.907570 |

|---|

| 2017-01-16 | 000005.SZA | 62.185596 | 62.278271 | NaN | NaN |

|---|

| 2017-01-17 | 000005.SZA | NaN | 60.424751 | NaN | 60.054047 |

|---|

| 2017-01-18 | 000005.SZA | 60.888130 | 64.687851 | 60.517429 | 60.702782 |

|---|

| 2017-01-19 | 000005.SZA | NaN | 60.980808 | NaN | NaN |

|---|

| 2017-01-20 | 000005.SZA | 60.239399 | 60.610104 | NaN | 60.239399 |

|---|

| 2017-01-23 | 000005.SZA | 60.332077 | 60.888130 | 60.146725 | 60.517429 |

|---|

| 2017-01-24 | 000005.SZA | 60.610104 | 60.610104 | 60.239399 | 60.332077 |

|---|

| 2017-01-25 | 000005.SZA | 60.424751 | 60.517429 | NaN | 60.424751 |

|---|

| 2017-01-26 | 000005.SZA | 60.517429 | 60.795456 | 60.424751 | 60.795456 |

|---|

| 2017-02-03 | 000005.SZA | 60.888130 | 60.888130 | 60.239399 | 60.517429 |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 |

|---|

| 2017-02-07 | 000005.SZA | 60.795456 | 61.629539 | 60.424751 | 61.166161 |

|---|

| 2017-02-08 | 000005.SZA | 60.980808 | 61.166161 | 60.424751 | 61.073483 |

|---|

| 2017-02-09 | 000005.SZA | 61.073483 | 61.536865 | 60.888130 | 61.444187 |

|---|

| 2017-02-10 | 000005.SZA | 61.629539 | 61.629539 | 61.258835 | 61.536865 |

|---|

丢弃数据

# 丢弃行

df.drop([pd.Timestamp('2017-01-06')]) # 丢弃行

| date | instrument | open | high | low | close |

|---|

| 2017-01-09 | 000005.SZA | 64.502495 | 65.892639 | 64.409821 | 65.521935 |

|---|

| 2017-01-10 | 000005.SZA | 65.521935 | 65.614609 | 64.965874 | 64.965874 |

|---|

| 2017-01-11 | 000005.SZA | 64.965874 | 64.965874 | 63.575737 | 63.575737 |

|---|

| 2017-01-12 | 000005.SZA | 63.205032 | 63.761089 | 62.741653 | 62.927006 |

|---|

| 2017-01-13 | 000005.SZA | 62.927006 | 63.205032 | 61.907570 | 61.907570 |

|---|

| 2017-01-16 | 000005.SZA | 62.185596 | 62.278271 | 56.810387 | 59.683346 |

|---|

| 2017-01-17 | 000005.SZA | 59.497993 | 60.424751 | 58.385880 | 60.054047 |

|---|

| 2017-01-18 | 000005.SZA | 60.888130 | 64.687851 | 60.517429 | 60.702782 |

|---|

| 2017-01-19 | 000005.SZA | 59.776020 | 60.980808 | 59.497993 | 59.683346 |

|---|

| 2017-01-20 | 000005.SZA | 60.239399 | 60.610104 | 59.776020 | 60.239399 |

|---|

| 2017-01-23 | 000005.SZA | 60.332077 | 60.888130 | 60.146725 | 60.517429 |

|---|

| 2017-01-24 | 000005.SZA | 60.610104 | 60.610104 | 60.239399 | 60.332077 |

|---|

| 2017-01-25 | 000005.SZA | 60.424751 | 60.517429 | 59.961372 | 60.424751 |

|---|

| 2017-01-26 | 000005.SZA | 60.517429 | 60.795456 | 60.424751 | 60.795456 |

|---|

| 2017-02-03 | 000005.SZA | 60.888130 | 60.888130 | 60.239399 | 60.517429 |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 |

|---|

| 2017-02-07 | 000005.SZA | 60.795456 | 61.629539 | 60.424751 | 61.166161 |

|---|

| 2017-02-08 | 000005.SZA | 60.980808 | 61.166161 | 60.424751 | 61.073483 |

|---|

| 2017-02-09 | 000005.SZA | 61.073483 | 61.536865 | 60.888130 | 61.444187 |

|---|

| 2017-02-10 | 000005.SZA | 61.629539 | 61.629539 | 61.258835 | 61.536865 |

|---|

# 丢弃列 ,del df['open'],原地修改

df.drop('open',axis=1) # 非原地修改

| date | instrument | high | low | close |

|---|

| 2017-01-06 | 000005.SZA | 66.077988 | 64.224464 | 65.151230 |

|---|

| 2017-01-09 | 000005.SZA | 65.892639 | 64.409821 | 65.521935 |

|---|

| 2017-01-10 | 000005.SZA | 65.614609 | 64.965874 | 64.965874 |

|---|

| 2017-01-11 | 000005.SZA | 64.965874 | 63.575737 | 63.575737 |

|---|

| 2017-01-12 | 000005.SZA | 63.761089 | 62.741653 | 62.927006 |

|---|

| 2017-01-13 | 000005.SZA | 63.205032 | 61.907570 | 61.907570 |

|---|

| 2017-01-16 | 000005.SZA | 62.278271 | 56.810387 | 59.683346 |

|---|

| 2017-01-17 | 000005.SZA | 60.424751 | 58.385880 | 60.054047 |

|---|

| 2017-01-18 | 000005.SZA | 64.687851 | 60.517429 | 60.702782 |

|---|

| 2017-01-19 | 000005.SZA | 60.980808 | 59.497993 | 59.683346 |

|---|

| 2017-01-20 | 000005.SZA | 60.610104 | 59.776020 | 60.239399 |

|---|

| 2017-01-23 | 000005.SZA | 60.888130 | 60.146725 | 60.517429 |

|---|

| 2017-01-24 | 000005.SZA | 60.610104 | 60.239399 | 60.332077 |

|---|

| 2017-01-25 | 000005.SZA | 60.517429 | 59.961372 | 60.424751 |

|---|

| 2017-01-26 | 000005.SZA | 60.795456 | 60.424751 | 60.795456 |

|---|

| 2017-02-03 | 000005.SZA | 60.888130 | 60.239399 | 60.517429 |

|---|

| 2017-02-06 | 000005.SZA | 60.795456 | 60.146725 | 60.795456 |

|---|

| 2017-02-07 | 000005.SZA | 61.629539 | 60.424751 | 61.166161 |

|---|

| 2017-02-08 | 000005.SZA | 61.166161 | 60.424751 | 61.073483 |

|---|

| 2017-02-09 | 000005.SZA | 61.536865 | 60.888130 | 61.444187 |

|---|

| 2017-02-10 | 000005.SZA | 61.629539 | 61.258835 | 61.536865 |

|---|

对齐、合并、赋值

# 通过索引,自动对齐两个DataFrame的数据

df1 = D.history_data(['000005.SZA'],start_date='2017-02-01',end_date='2017-02-10',

fields=['open','high','low','close'], groupped_by_instrument=False).set_index('date')

df2 = D.history_data(['000005.SZA'],start_date='2017-01-01',end_date='2017-02-10',

fields=['market_cap','pe_ttm'], groupped_by_instrument=False).set_index('date')

# 取索引的交集进行合并

df1.merge(df2,left_index=True,right_index=True,how='inner')

| date | instrument_x | open | high | low | close | instrument_y | market_cap | pe_ttm |

|---|

| 2017-02-03 | 000005.SZA | 60.888130 | 60.888130 | 60.239399 | 60.517429 | 000005.SZA | 6.912246e+09 | 63.786068 |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 | 000005.SZA | 6.944002e+09 | 64.079109 |

|---|

| 2017-02-07 | 000005.SZA | 60.795456 | 61.629539 | 60.424751 | 61.166161 | 000005.SZA | 6.986343e+09 | 64.469841 |

|---|

| 2017-02-08 | 000005.SZA | 60.980808 | 61.166161 | 60.424751 | 61.073483 | 000005.SZA | 6.975758e+09 | 64.372154 |

|---|

| 2017-02-09 | 000005.SZA | 61.073483 | 61.536865 | 60.888130 | 61.444187 | 000005.SZA | 7.018099e+09 | 64.762886 |

|---|

| 2017-02-10 | 000005.SZA | 61.629539 | 61.629539 | 61.258835 | 61.536865 | 000005.SZA | 7.028685e+09 | 64.860565 |

|---|

# 取索引的并集进行合并

df1.merge(df2,left_index=True,right_index=True,how='outer')

| date | instrument_x | open | high | low | close | instrument_y | market_cap | pe_ttm |

|---|

| 2017-01-03 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 7.229807e+09 | 66.716515 |

|---|

| 2017-01-04 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 7.420343e+09 | 68.474785 |

|---|

| 2017-01-05 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 7.378002e+09 | 68.084053 |

|---|

| 2017-01-06 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 7.441514e+09 | 68.670143 |

|---|

| 2017-01-09 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 7.483855e+09 | 69.060875 |

|---|

| 2017-01-10 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 7.420343e+09 | 68.474785 |

|---|

| 2017-01-11 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 7.261563e+09 | 67.009560 |

|---|

| 2017-01-12 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 7.187465e+09 | 66.325790 |

|---|

| 2017-01-13 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 7.071026e+09 | 65.251289 |

|---|

| 2017-01-16 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 6.816977e+09 | 62.906933 |

|---|

| 2017-01-17 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 6.859319e+09 | 63.297661 |

|---|

| 2017-01-18 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 6.933416e+09 | 63.981430 |

|---|

| 2017-01-19 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 6.816977e+09 | 62.906933 |

|---|

| 2017-01-20 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 6.880489e+09 | 63.493023 |

|---|

| 2017-01-23 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 6.912246e+09 | 63.786068 |

|---|

| 2017-01-24 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 6.891075e+09 | 63.590702 |

|---|

| 2017-01-25 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 6.901660e+09 | 63.688385 |

|---|

| 2017-01-26 | NaN | NaN | NaN | NaN | NaN | 000005.SZA | 6.944002e+09 | 64.079109 |

|---|

| 2017-02-03 | 000005.SZA | 60.888130 | 60.888130 | 60.239399 | 60.517429 | 000005.SZA | 6.912246e+09 | 63.786068 |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 | 000005.SZA | 6.944002e+09 | 64.079109 |

|---|

| 2017-02-07 | 000005.SZA | 60.795456 | 61.629539 | 60.424751 | 61.166161 | 000005.SZA | 6.986343e+09 | 64.469841 |

|---|

| 2017-02-08 | 000005.SZA | 60.980808 | 61.166161 | 60.424751 | 61.073483 | 000005.SZA | 6.975758e+09 | 64.372154 |

|---|

| 2017-02-09 | 000005.SZA | 61.073483 | 61.536865 | 60.888130 | 61.444187 | 000005.SZA | 7.018099e+09 | 64.762886 |

|---|

| 2017-02-10 | 000005.SZA | 61.629539 | 61.629539 | 61.258835 | 61.536865 | 000005.SZA | 7.028685e+09 | 64.860565 |

|---|

# 通过标签赋值

df1.ix['2017-02-03','market_cap'] = 0

df1

| date | instrument | open | high | low | close | market_cap |

|---|

| 2017-02-03 | 000005.SZA | 60.888130 | 60.888130 | 60.239399 | 60.517429 | 0.0 |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 | NaN |

|---|

| 2017-02-07 | 000005.SZA | 60.795456 | 61.629539 | 60.424751 | 61.166161 | NaN |

|---|

| 2017-02-08 | 000005.SZA | 60.980808 | 61.166161 | 60.424751 | 61.073483 | NaN |

|---|

| 2017-02-09 | 000005.SZA | 61.073483 | 61.536865 | 60.888130 | 61.444187 | NaN |

|---|

| 2017-02-10 | 000005.SZA | 61.629539 | 61.629539 | 61.258835 | 61.536865 | NaN |

|---|

# 通过位置赋值

df1.iat[0,0] ='600519.SHA'

df1

| date | instrument | open | high | low | close | market_cap |

|---|

| 2017-02-03 | 600519.SHA | 60.888130 | 60.888130 | 60.239399 | 60.517429 | 0.0 |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 | NaN |

|---|

| 2017-02-07 | 000005.SZA | 60.795456 | 61.629539 | 60.424751 | 61.166161 | NaN |

|---|

| 2017-02-08 | 000005.SZA | 60.980808 | 61.166161 | 60.424751 | 61.073483 | NaN |

|---|

| 2017-02-09 | 000005.SZA | 61.073483 | 61.536865 | 60.888130 | 61.444187 | NaN |

|---|

| 2017-02-10 | 000005.SZA | 61.629539 | 61.629539 | 61.258835 | 61.536865 | NaN |

|---|

# 通过where操作来赋值

df1[df1['market_cap'] == 0] = 111

df1.head(4)

| date | instrument | open | high | low | close | market_cap |

|---|

| 2017-02-03 | 111 | 111.000000 | 111.000000 | 111.000000 | 111.000000 | 111.0 |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 | NaN |

|---|

| 2017-02-07 | 000005.SZA | 60.795456 | 61.629539 | 60.424751 | 61.166161 | NaN |

|---|

| 2017-02-08 | 000005.SZA | 60.980808 | 61.166161 | 60.424751 | 61.073483 | NaN |

|---|

缺失值处理

在pandas中,用np.nan来代表缺失值,这些值默认不会参与运算。

# 通过set_index设置某一列为索引

df2 = D.history_data(['000005.SZA'],'2016-11-15','2016-12-01',fields=['open','high','low','close'],

groupped_by_instrument=False).set_index('date')

df2

| date | instrument | open | high | low | close |

|---|

| 2016-11-15 | 000005.SZA | 69.785027 | 70.619110 | 69.507004 | 70.526436 |

|---|

| 2016-11-16 | 000005.SZA | 71.360519 | 71.545876 | 69.785027 | 70.155731 |

|---|

| 2016-11-17 | 000005.SZA | 69.970383 | 70.155731 | 69.136299 | 69.970383 |

|---|

| 2016-11-18 | 000005.SZA | 69.970383 | 70.433762 | 69.321648 | 70.063057 |

|---|

| 2016-11-21 | 000005.SZA | 69.970383 | 70.711784 | 69.692352 | 69.877701 |

|---|

| 2016-11-22 | 000005.SZA | 69.970383 | 70.619110 | 69.692352 | 70.341087 |

|---|

| 2016-11-23 | 000005.SZA | 70.433762 | 70.897141 | 69.785027 | 70.526436 |

|---|

| 2016-11-24 | 000005.SZA | 70.155731 | 70.619110 | 69.507004 | 69.970383 |

|---|

| 2016-11-25 | 000005.SZA | 69.970383 | 70.433762 | 68.672913 | 70.063057 |

|---|

| 2016-11-28 | 000005.SZA | 70.433762 | 71.638550 | 69.785027 | 70.711784 |

|---|

| 2016-11-29 | 000005.SZA | 70.433762 | 71.082489 | 69.228973 | 69.321648 |

|---|

| 2016-11-30 | 000005.SZA | 69.043617 | 69.599678 | 68.580238 | 68.765594 |

|---|

| 2016-12-01 | 000005.SZA | 68.950943 | 69.321648 | 68.672913 | 69.043617 |

|---|

# 通过resset_index来将DataFrame的索引转化为列

df2.reset_index()

| date | instrument | open | high | low | close |

|---|

| 0 | 2016-11-15 | 000005.SZA | 69.785027 | 70.619110 | 69.507004 | 70.526436 |

|---|

| 1 | 2016-11-16 | 000005.SZA | 71.360519 | 71.545876 | 69.785027 | 70.155731 |

|---|

| 2 | 2016-11-17 | 000005.SZA | 69.970383 | 70.155731 | 69.136299 | 69.970383 |

|---|

| 3 | 2016-11-18 | 000005.SZA | 69.970383 | 70.433762 | 69.321648 | 70.063057 |

|---|

| 4 | 2016-11-21 | 000005.SZA | 69.970383 | 70.711784 | 69.692352 | 69.877701 |

|---|

| 5 | 2016-11-22 | 000005.SZA | 69.970383 | 70.619110 | 69.692352 | 70.341087 |

|---|

| 6 | 2016-11-23 | 000005.SZA | 70.433762 | 70.897141 | 69.785027 | 70.526436 |

|---|

| 7 | 2016-11-24 | 000005.SZA | 70.155731 | 70.619110 | 69.507004 | 69.970383 |

|---|

| 8 | 2016-11-25 | 000005.SZA | 69.970383 | 70.433762 | 68.672913 | 70.063057 |

|---|

| 9 | 2016-11-28 | 000005.SZA | 70.433762 | 71.638550 | 69.785027 | 70.711784 |

|---|

| 10 | 2016-11-29 | 000005.SZA | 70.433762 | 71.082489 | 69.228973 | 69.321648 |

|---|

| 11 | 2016-11-30 | 000005.SZA | 69.043617 | 69.599678 | 68.580238 | 68.765594 |

|---|

| 12 | 2016-12-01 | 000005.SZA | 68.950943 | 69.321648 | 68.672913 | 69.043617 |

|---|

# 将2016-11-22以后的数据用缺失值代替

df2.ix['2016-11-22':,:] = np.nan

df2

| date | instrument | open | high | low | close |

|---|

| 2016-11-15 | 000005.SZA | 69.785027 | 70.619110 | 69.507004 | 70.526436 |

|---|

| 2016-11-16 | 000005.SZA | 71.360519 | 71.545876 | 69.785027 | 70.155731 |

|---|

| 2016-11-17 | 000005.SZA | 69.970383 | 70.155731 | 69.136299 | 69.970383 |

|---|

| 2016-11-18 | 000005.SZA | 69.970383 | 70.433762 | 69.321648 | 70.063057 |

|---|

| 2016-11-21 | 000005.SZA | 69.970383 | 70.711784 | 69.692352 | 69.877701 |

|---|

| 2016-11-22 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-11-23 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-11-24 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-11-25 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-11-28 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-11-29 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-11-30 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-12-01 | NaN | NaN | NaN | NaN | NaN |

|---|

# 剔除所有包含缺失值的行数据

df2.dropna(how='any')

| date | instrument | open | high | low | close |

|---|

| 2016-11-15 | 000005.SZA | 69.785027 | 70.619110 | 69.507004 | 70.526436 |

|---|

| 2016-11-16 | 000005.SZA | 71.360519 | 71.545876 | 69.785027 | 70.155731 |

|---|

| 2016-11-17 | 000005.SZA | 69.970383 | 70.155731 | 69.136299 | 69.970383 |

|---|

| 2016-11-18 | 000005.SZA | 69.970383 | 70.433762 | 69.321648 | 70.063057 |

|---|

| 2016-11-21 | 000005.SZA | 69.970383 | 70.711784 | 69.692352 | 69.877701 |

|---|

# 填充缺失值

df2.fillna(value=154)

| date | instrument | open | high | low | close |

|---|

| 2016-11-15 | 000005.SZA | 69.785027 | 70.619110 | 69.507004 | 70.526436 |

|---|

| 2016-11-16 | 000005.SZA | 71.360519 | 71.545876 | 69.785027 | 70.155731 |

|---|

| 2016-11-17 | 000005.SZA | 69.970383 | 70.155731 | 69.136299 | 69.970383 |

|---|

| 2016-11-18 | 000005.SZA | 69.970383 | 70.433762 | 69.321648 | 70.063057 |

|---|

| 2016-11-21 | 000005.SZA | 69.970383 | 70.711784 | 69.692352 | 69.877701 |

|---|

| 2016-11-22 | 154 | 154.000000 | 154.000000 | 154.000000 | 154.000000 |

|---|

| 2016-11-23 | 154 | 154.000000 | 154.000000 | 154.000000 | 154.000000 |

|---|

| 2016-11-24 | 154 | 154.000000 | 154.000000 | 154.000000 | 154.000000 |

|---|

| 2016-11-25 | 154 | 154.000000 | 154.000000 | 154.000000 | 154.000000 |

|---|

| 2016-11-28 | 154 | 154.000000 | 154.000000 | 154.000000 | 154.000000 |

|---|

| 2016-11-29 | 154 | 154.000000 | 154.000000 | 154.000000 | 154.000000 |

|---|

| 2016-11-30 | 154 | 154.000000 | 154.000000 | 154.000000 | 154.000000 |

|---|

| 2016-12-01 | 154 | 154.000000 | 154.000000 | 154.000000 | 154.000000 |

|---|

# 获取值是否为nan的布尔标记

pd.isnull(df2)

| date | instrument | open | high | low | close |

|---|

| 2016-11-15 | False | False | False | False | False |

|---|

| 2016-11-16 | False | False | False | False | False |

|---|

| 2016-11-17 | False | False | False | False | False |

|---|

| 2016-11-18 | False | False | False | False | False |

|---|

| 2016-11-21 | False | False | False | False | False |

|---|

| 2016-11-22 | True | True | True | True | True |

|---|

| 2016-11-23 | True | True | True | True | True |

|---|

| 2016-11-24 | True | True | True | True | True |

|---|

| 2016-11-25 | True | True | True | True | True |

|---|

| 2016-11-28 | True | True | True | True | True |

|---|

| 2016-11-29 | True | True | True | True | True |

|---|

| 2016-11-30 | True | True | True | True | True |

|---|

| 2016-12-01 | True | True | True | True | True |

|---|

运算

# describe()能对数据做一个快速统计汇总

df2.describe()

| open | high | low | close |

|---|

| count | 5.000000 | 5.000000 | 5.000000 | 5.000000 |

|---|

| mean | 70.211342 | 70.693253 | 69.488464 | 70.118668 |

|---|

| std | 0.647406 | 0.521793 | 0.265382 | 0.250397 |

|---|

| min | 69.785027 | 70.155731 | 69.136299 | 69.877701 |

|---|

| 25% | 69.970383 | 70.433762 | 69.321648 | 69.970383 |

|---|

| 50% | 69.970383 | 70.619110 | 69.507004 | 70.063057 |

|---|

| 75% | 69.970383 | 70.711784 | 69.692352 | 70.155731 |

|---|

| max | 71.360519 | 71.545876 | 69.785027 | 70.526436 |

|---|

运算过程中,通常不包含缺失值。

# 按列计算平均值

df2.mean()

open 70.211342

high 70.693253

low 69.488464

close 70.118668

dtype: float32

# 按行计算平均值

df2.mean(1)

date

2016-11-15 70.109398

2016-11-16 70.711792

2016-11-17 69.808197

2016-11-18 69.947212

2016-11-21 70.063049

2016-11-22 NaN

2016-11-23 NaN

2016-11-24 NaN

2016-11-25 NaN

2016-11-28 NaN

2016-11-29 NaN

2016-11-30 NaN

2016-12-01 NaN

dtype: float32

# pandas会按照指定的索引对齐

dates = ['2016-11-15','2016-11-16','2016-11-17','2016-11-18','2016-11-23']

for i in range(len(dates)):

dates[i] = pd.Timestamp(dates[i])

pd.DataFrame(df2,index=dates)

| instrument | open | high | low | close |

|---|

| 2016-11-15 | 000005.SZA | 69.785027 | 70.619110 | 69.507004 | 70.526436 |

|---|

| 2016-11-16 | 000005.SZA | 71.360519 | 71.545876 | 69.785027 | 70.155731 |

|---|

| 2016-11-17 | 000005.SZA | 69.970383 | 70.155731 | 69.136299 | 69.970383 |

|---|

| 2016-11-18 | 000005.SZA | 69.970383 | 70.433762 | 69.321648 | 70.063057 |

|---|

| 2016-11-23 | NaN | NaN | NaN | NaN | NaN |

|---|

Apply 函数

# 通过apply函数求每个交易日高开低收价格的平均值

df3 = df2[['open','high','low','close']]

df3.apply(np.mean,axis=1)

date

2016-11-15 70.109398

2016-11-16 70.711792

2016-11-17 69.808197

2016-11-18 69.947212

2016-11-21 70.063049

2016-11-22 NaN

2016-11-23 NaN

2016-11-24 NaN

2016-11-25 NaN

2016-11-28 NaN

2016-11-29 NaN

2016-11-30 NaN

2016-12-01 NaN

dtype: float64

# 计算每日股价振幅,振幅=最高价-最低价

df3.apply(lambda x:x.max()-x.min())

open 1.575493

high 1.390144

low 0.648727

close 0.648735

dtype: float64

# 通过apply对各个元素调用字符串方法,比如将股票代码的后缀改成小写

temp = df2.ix['2016-11-15':'2016-11-21']

temp['instrument'].apply(lambda x : x.lower())

date

2016-11-15 000005.sza

2016-11-16 000005.sza

2016-11-17 000005.sza

2016-11-18 000005.sza

2016-11-21 000005.sza

Name: instrument,

dtype: object

rolling_apply的使用

# 获取数据

data = D.history_data(['000001.SZA'], '2016-01-01', '2016-10-01',

fields=['close'],

groupped_by_instrument=False).set_index('date')

# 计算5日均线和20日均线

data['MA_' + str(5)] = pd.rolling_apply(data['close'], 5,np.mean)

data['MA_' + str(20)] = pd.rolling_apply(data['close'], 20,np.mean)

data.head(12)

| instrument | close | MA_5 | MA_20 |

|---|

| date | | | | |

|---|

| 2016-01-04 | 000001.SZA | 974.312988 | NaN | NaN |

|---|

| 2016-01-05 | 000001.SZA | 980.332520 | NaN | NaN |

|---|

| 2016-01-06 | 000001.SZA | 991.511780 | NaN | NaN |

|---|

| 2016-01-07 | 000001.SZA | 940.775269 | NaN | NaN |

|---|

| 2016-01-08 | 000001.SZA | 956.254211 | 968.637354 | NaN |

|---|

| 2016-01-11 | 000001.SZA | 925.296326 | 958.834021 | NaN |

|---|

| 2016-01-12 | 000001.SZA | 929.596008 | 948.686719 | NaN |

|---|

| 2016-01-13 | 000001.SZA | 920.996643 | 934.583691 | NaN |

|---|

| 2016-01-14 | 000001.SZA | 926.156250 | 931.659888 | NaN |

|---|

| 2016-01-15 | 000001.SZA | 899.498108 | 920.308667 | NaN |

|---|

| 2016-01-18 | 000001.SZA | 895.198425 | 914.289087 | NaN |

|---|

| 2016-01-19 | 000001.SZA | 920.996643 | 912.569214 | NaN |

|---|

合并

Concat 连接

# 通过concat()来连接pandas对象

a = df2.ix['2016-11-15':'2016-11-16']

b = df2.ix['2016-11-20':'2016-11-26']

pd.concat([a,b])

| date | instrument | open | high | low | close |

|---|

| 2016-11-15 | 000005.SZA | 69.785027 | 70.619110 | 69.507004 | 70.526436 |

|---|

| 2016-11-16 | 000005.SZA | 71.360519 | 71.545876 | 69.785027 | 70.155731 |

|---|

| 2016-11-21 | 000005.SZA | 69.970383 | 70.711784 | 69.692352 | 69.877701 |

|---|

| 2016-11-22 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-11-23 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-11-24 | NaN | NaN | NaN | NaN | NaN |

|---|

| 2016-11-25 | NaN | NaN | NaN | NaN | NaN |

|---|

Join 合并

# 合并,和merge功能类似

df5 = D.history_data(['000005.SZA'],start_date='2017-02-01',end_date='2017-02-10',

fields=['open','high','low','close'], groupped_by_instrument=False).set_index('date')

df6 = D.history_data(['000005.SZA'],start_date='2017-01-01',end_date='2017-02-10',

fields=['market_cap','pe_ttm'], groupped_by_instrument=False).set_index('date')

# 删除股票代码列

del df5['instrument']

del df6['instrument']

# 按日期轴合并数据

df5.join(df6)

| date | open | high | low | close | market_cap | pe_ttm |

|---|

| 2017-02-03 | 60.888130 | 60.888130 | 60.239399 | 60.517429 | 6.912246e+09 | 63.786068 |

|---|

| 2017-02-06 | 60.517429 | 60.795456 | 60.146725 | 60.795456 | 6.944002e+09 | 64.079109 |

|---|

| 2017-02-07 | 60.795456 | 61.629539 | 60.424751 | 61.166161 | 6.986343e+09 | 64.469841 |

|---|

| 2017-02-08 | 60.980808 | 61.166161 | 60.424751 | 61.073483 | 6.975758e+09 | 64.372154 |

|---|

| 2017-02-09 | 61.073483 | 61.536865 | 60.888130 | 61.444187 | 7.018099e+09 | 64.762886 |

|---|

| 2017-02-10 | 61.629539 | 61.629539 | 61.258835 | 61.536865 | 7.028685e+09 | 64.860565 |

|---|

Append 添加

# 通过append方法,将若干行添加到dataFrame后面

c = df1.ix['2017-02-03':'2017-02-06']

d = df1.ix['2017-02-08':'2017-02-10']

c.append(d)

| date | instrument | open | high | low | close | market_cap |

|---|

| 2017-02-03 | 111 | 111.000000 | 111.000000 | 111.000000 | 111.000000 | 111.0 |

|---|

| 2017-02-06 | 000005.SZA | 60.517429 | 60.795456 | 60.146725 | 60.795456 | NaN |

|---|

| 2017-02-08 | 000005.SZA | 60.980808 | 61.166161 | 60.424751 | 61.073483 | NaN |

|---|

| 2017-02-09 | 000005.SZA | 61.073483 | 61.536865 | 60.888130 | 61.444187 | NaN |

|---|

| 2017-02-10 | 000005.SZA | 61.629539 | 61.629539 | 61.258835 | 61.536865 | NaN |

|---|

数据透视表

也许大多数人都有在Excel中使用数据透视表的经历,其实Pandas也提供了一个类似的功能,名为pivot_table

df = pd.DataFrame({'A' : ['one', 'one', 'two', 'three'] * 3,

'B' : ['A', 'B', 'C'] * 4,

'C' : ['foo', 'foo', 'foo', 'bar', 'bar', 'bar'] * 2,

'D' : np.random.randn(12),

'E' : np.random.randn(12)})

df

| A | B | C | D | E |

|---|

| 0 | one | A | foo | -2.216199 | 0.288395 |

|---|

| 1 | one | B | foo | 1.572496 | 0.159725 |

|---|

| 2 | two | C | foo | -0.559229 | 0.204685 |

|---|

| 3 | three | A | bar | 2.044115 | -0.032094 |

|---|

| 4 | one | B | bar | 1.022778 | 1.650399 |

|---|

| 5 | one | C | bar | -0.558943 | 1.074547 |

|---|

| 6 | two | A | foo | -0.147329 | 0.150598 |

|---|

| 7 | three | B | foo | 0.659760 | 1.779248 |

|---|

| 8 | one | C | foo | 0.506593 | -0.343586 |

|---|

| 9 | one | A | bar | 0.941787 | -0.277070 |

|---|

| 10 | two | B | bar | 1.662566 | -0.558867 |

|---|

| 11 | three | C | bar | -1.671826 | -0.939832 |

|---|

# 我们可以轻松得到数据透视表

pd.pivot_table(df, values='D', index=['A', 'B'], columns=['C'])

| C | bar | foo |

|---|

| A | B | | |

|---|

| one | A | 0.941787 | -2.216199 |

|---|

| B | 1.022778 | 1.572496 |

|---|

| C | -0.558943 | 0.506593 |

|---|

| three | A | 2.044115 | NaN |

|---|

| B | NaN | 0.659760 |

|---|

| C | -1.671826 | NaN |

|---|

| two | A | NaN | -0.147329 |

|---|

| B | 1.662566 | NaN |

|---|

| C | NaN | -0.559229 |

|---|

# 查看每个交易日多只股票的收盘价数据(每列为股票)

df = D.history_data(['000001.SZA','000002.SZA'],'2016-12-15','2017-01-01',fields=['close'],groupped_by_instrument=False)

pd.pivot_table(df,values='close',index=['date'],columns=['instrument'])

| instrument | 000001.SZA | 000002.SZA |

|---|

| date | | |

|---|

| 2016-12-15 | 969.013855 | 2965.032471 |

|---|

| 2016-12-16 | 969.013855 | 2982.294189 |

|---|

| 2016-12-19 | 963.775940 | 2801.710205 |

|---|

| 2016-12-20 | 954.347656 | 2699.467529 |

|---|

| 2016-12-21 | 959.585571 | 2719.385010 |

|---|

| 2016-12-22 | 957.490417 | 2736.646729 |

|---|

| 2016-12-23 | 951.204956 | 2695.484131 |

|---|

| 2016-12-26 | 955.395264 | 2741.958008 |

|---|

| 2016-12-27 | 951.204956 | 2844.200439 |

|---|

| 2016-12-28 | 949.109802 | 2814.988281 |

|---|

| 2016-12-29 | 951.204956 | 2767.186768 |

|---|

| 2016-12-30 | 953.300110 | 2728.679688 |

|---|

# 查看不同股票每个交易日的收盘价数据(每列为交易日)

pd.pivot_table(df,values = 'close',index=['instrument'],columns=['date'])

| date | 2016-12-15 00 :00 :00 | 2016-12-16 00 :00 :00 | 2016-12-19 00 :00 :00 | 2016-12-20 00 :00 :00 | 2016-12-21 00 :00 :00 | 2016-12-22 00 :00 :00 | 2016-12-23 00 :00 :00 | 2016-12-26 00 :00 :00 | 2016-12-27 00 :00 :00 | 2016-12-28 00 :00 :00 | 2016-12-29 00 :00 :00 | 2016-12-30 00 :00 :00 |

|---|

| instrument | | | | | | | | | | | | |

|---|

| 000001.SZA | 969.013855 | 969.013855 | 963.775940 | 954.347656 | 959.585571 | 957.490417 | 951.204956 | 955.395264 | 951.204956 | 949.109802 | 951.204956 | 953.300110 |

|---|

| 000002.SZA | 2965.032471 | 2982.294189 | 2801.710205 | 2699.467529 | 2719.385010 | 2736.646729 | 2695.484131 | 2741.958008 | 2844.200439 | 2814.988281 | 2767.186768 | 2728.679688 |

|---|

分组

对于“group by”操作,我们通常是指以下一个或几个步骤: * 划分 按照某些标准将数据分为不同的组 * 应用 对每组数据分别执行一个函数 * 组合 将结果组合到一个数据结构

df = pd.DataFrame({'A' : ['foo', 'bar', 'foo', 'bar',

'foo', 'bar', 'foo', 'bar'],

'B' : ['one', 'one', 'two', 'three',

'two', 'two', 'one', 'three'],

'C' : np.random.randn(8),

'D' : np.random.randn(8)})

df

| A | B | C | D |

|---|

| 0 | foo | one | -0.160349 | -0.086323 |

|---|

| 1 | bar | one | -0.994189 | -1.444457 |

|---|

| 2 | foo | two | -0.095365 | 0.227690 |

|---|

| 3 | bar | three | 1.502585 | -0.286937 |

|---|

| 4 | foo | two | -0.258329 | -0.123057 |

|---|

| 5 | bar | two | 0.944706 | -0.211243 |

|---|

| 6 | foo | one | 1.542726 | -0.164639 |

|---|

| 7 | bar | three | 0.874764 | -0.075149 |

|---|

# 分组并对每个分组应用sum函数

df.groupby('A').sum()

| A | C | D |

|---|

| bar | 2.327866 | -2.017786 |

|---|

| foo | 1.028683 | -0.146328 |

|---|

# 按多个列分组形成层级索引,然后应用函数

df.groupby(['A','B']).sum()

| A | B | C | D |

|---|

| bar | one | -0.994189 | -1.444457 |

|---|

| three | 2.377349 | -0.362086 |

|---|

| two | 0.944706 | -0.211243 |

|---|

| foo | one | 1.382377 | -0.250962 |

|---|

| two | -0.353694 | 0.104634 |

|---|

# 通过groupby函数来计算各股票和大盘指数的相关系数(按年划分)

# 获取数据

stocks = ['000005.SZA','000010.SZA','603998.SHA','603898.SHA','000300.SHA']

df = D.history_data(stocks,'2010-01-01','2016-12-31',fields=['close'],groupped_by_instrument=False)

# 每列转化为每日多只股票的收盘价数据

df = pd.pivot_table(df,values='close',index=['date'],columns=['instrument'])

# 计算日收益率

rets = df.pct_change().dropna()

# 定义求相关系数的函数

corr_func = lambda x:x.corrwith(x['000300.SHA'])

# 求每只股票和大盘相关性

by_year = rets.groupby(lambda x:x.year)

by_year.apply(corr_func)

| instrument | 000005.SZA | 000010.SZA | 000300.SHA | 603898.SHA | 603998.SHA |

|---|

| 2015 | 0.442371 | 0.464152 | 1.0 | 0.189308 | 0.540383 |

|---|

| 2016 | 0.714335 | 0.649836 | 1.0 | 0.634643 | 0.623916 |

|---|

时间序列

pandas在对频率转换进行重新采样时拥有着简单,强大而且高效的功能(例如把按秒采样的数据转换为按5分钟采样的数据)。这在金融领域很常见,但又不限于此。

# 按秒产生5000个时间、起始时间是2017-01-01的时间序列

rng = pd.date_range('1/1/2017', periods=5000, freq='S')

rng

DatetimeIndex(['2017-01-01 00 :00 :00 ', '2017-01-01 00 :00 :01 ',

'2017-01-01 00 :00 :02 ', '2017-01-01 00 :00 :03 ',

'2017-01-01 00 :00 :04 ', '2017-01-01 00 :00 :05 ',

'2017-01-01 00 :00 :06 ', '2017-01-01 00 :00 :07 ',

'2017-01-01 00 :00 :08 ', '2017-01-01 00 :00 :09 ',

...

'2017-01-01 01 :23 :10 ', '2017-01-01 01 :23 :11 ',

'2017-01-01 01 :23 :12 ', '2017-01-01 01 :23 :13 ',

'2017-01-01 01 :23 :14 ', '2017-01-01 01 :23 :15 ',

'2017-01-01 01 :23 :16 ', '2017-01-01 01 :23 :17 ',

'2017-01-01 01 :23 :18 ', '2017-01-01 01 :23 :19 '],

dtype='datetime64[ns]', length=5000, freq='S')

# 重采样,计算每五分钟的总和

ts = pd.Series(np.random.randint(0,5000,len(rng)), index=rng)

ts.resample('5Min', how='sum')

2017-01-01 00:00:00 765477

2017-01-01 00:05:00 755782

2017-01-01 00:10:00 759474

2017-01-01 00:15:00 738611

2017-01-01 00:20:00 782165

2017-01-01 00:25:00 741331

2017-01-01 00:30:00 771220

2017-01-01 00:35:00 790782

2017-01-01 00:40:00 739392

2017-01-01 00:45:00 800815

2017-01-01 00:50:00 725954

2017-01-01 00:55:00 725840

2017-01-01 01:00:00 739548

2017-01-01 01:05:00 756409

2017-01-01 01:10:00 727530

2017-01-01 01:15:00 776613

2017-01-01 01:20:00 522169

Freq: 5T, dtype: int64

# 频率转化,将日K线数据装换成周K

df = D.history_data(['000001.SZA'],'2016-11-01','2016-12-31',fields=['close'],groupped_by_instrument=False).set_index('date')[['close']]

df.resample('W',how='ohlc')

| close |

|---|

| date | open | high | low | close |

|---|

| 2016-11-06 | 957.490417 | 957.490417 | 950.157349 | 954.347656 |

|---|

| 2016-11-13 | 955.395264 | 961.680786 | 950.157349 | 961.680786 |

|---|

| 2016-11-20 | 965.871094 | 966.918701 | 961.680786 | 961.680786 |

|---|

| 2016-11-27 | 967.966248 | 1007.774414 | 967.966248 | 1007.774414 |

|---|

| 2016-12-04 | 1008.821960 | 1008.821960 | 1000.441345 | 1000.441345 |

|---|

| 2016-12-11 | 991.013062 | 1010.917114 | 991.013062 | 1010.917114 |

|---|

| 2016-12-18 | 995.203430 | 995.203430 | 969.013855 | 969.013855 |

|---|

| 2016-12-25 | 963.775940 | 963.775940 | 951.204956 | 951.204956 |

|---|

| 2017-01-01 | 955.395264 | 955.395264 | 949.109802 | 953.300110 |

|---|

绘图

数据可视化:可交互图表 (文档: 数据可视化)

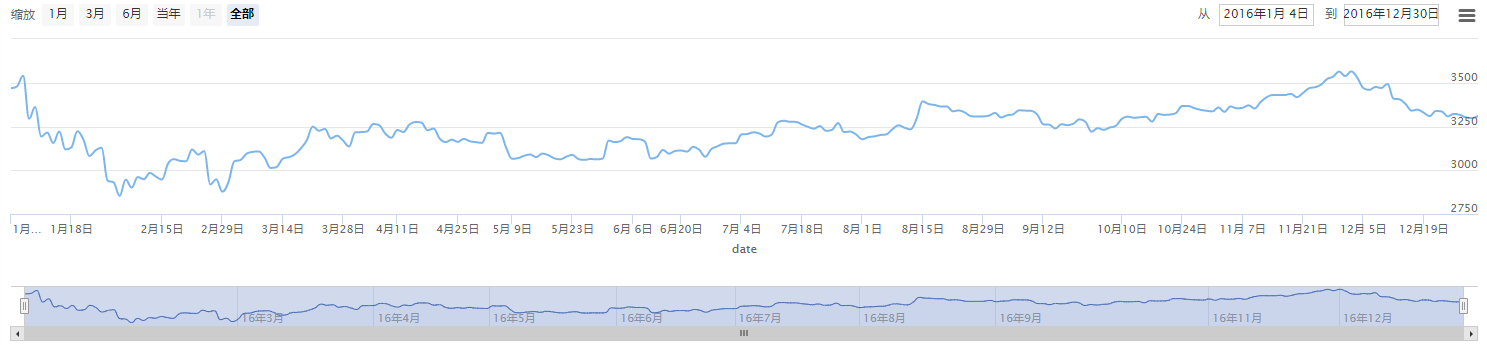

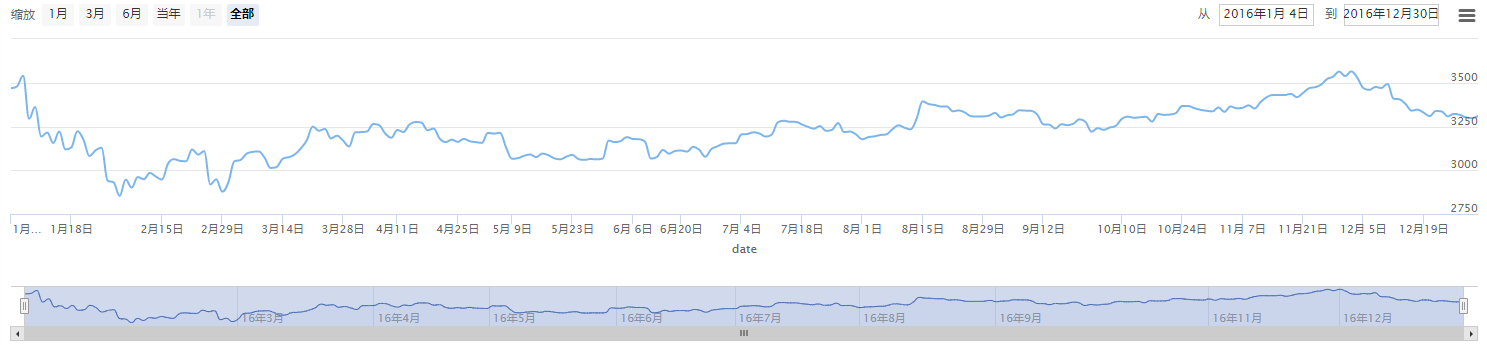

# 绘制沪深300走势图

history_hs300 = D.history_data(['000300.SHA'], start_date='2016-01-01', end_date='2017-01-01', fields=['close'])

T.plot(history_hs300[['date', 'close']].set_index('date'), chart_type='spline')

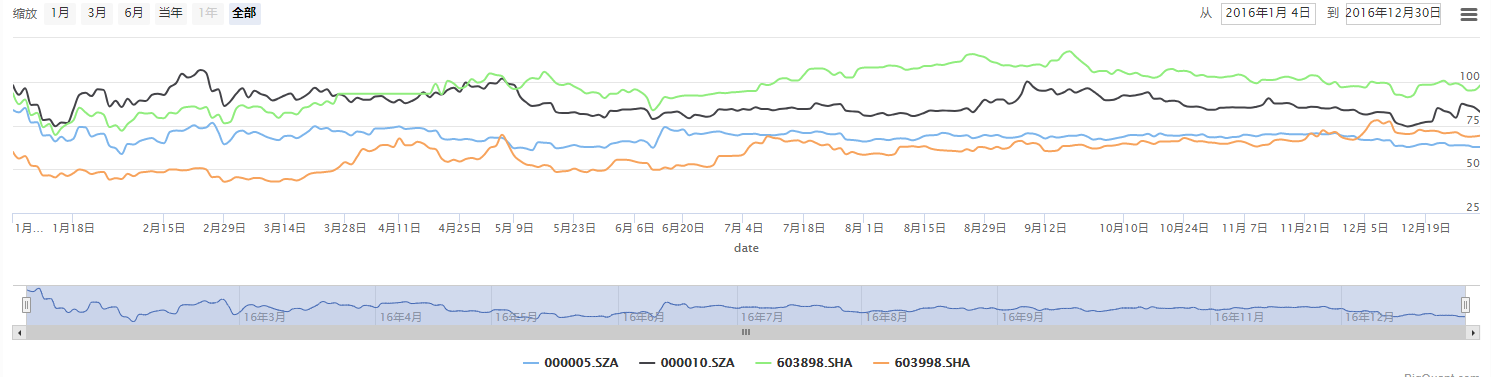

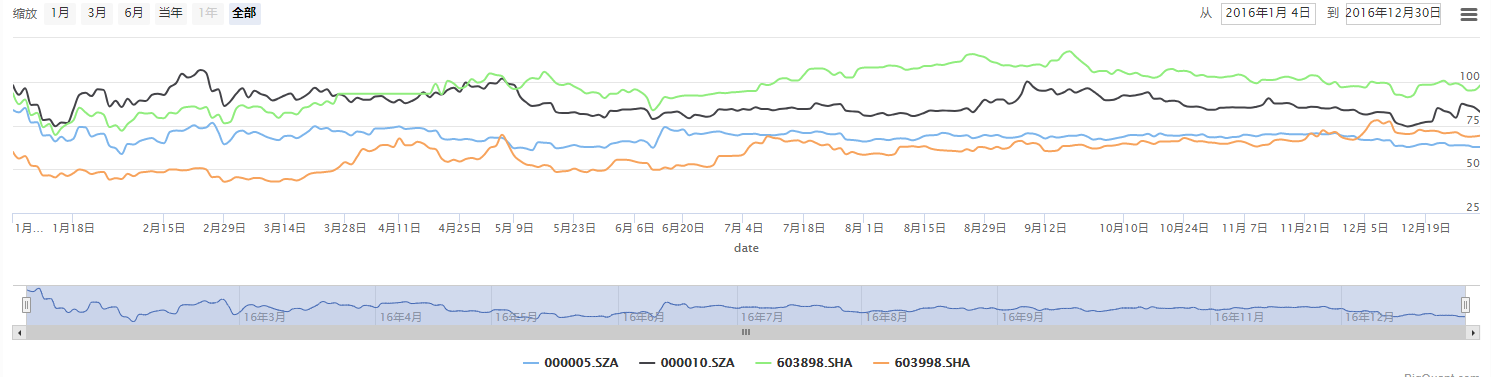

# 绘制多只股票走势图

data = D.history_data(['000005.SZA','000010.SZA','603998.SHA','603898.SHA'], start_date='2016-01-01', end_date='2017-01-01', fields=['close'],groupped_by_instrument=False)

data = pd.pivot_table(data,values='close',index=['date'],columns=['instrument'])

T.plot(data, chart_type='spline')